AI-Powered Manufacturing Excellence

Transforming production lines with computer vision and predictive analytics. 60% defect reduction. Full ROI payback in 14 months.

The Future of Enterprise AI

Our latest research on implementing AI at scale. Strategies for 2025 and beyond.

Custom Software That Scales

Purpose-built platforms for manufacturing, logistics, and enterprise operations.


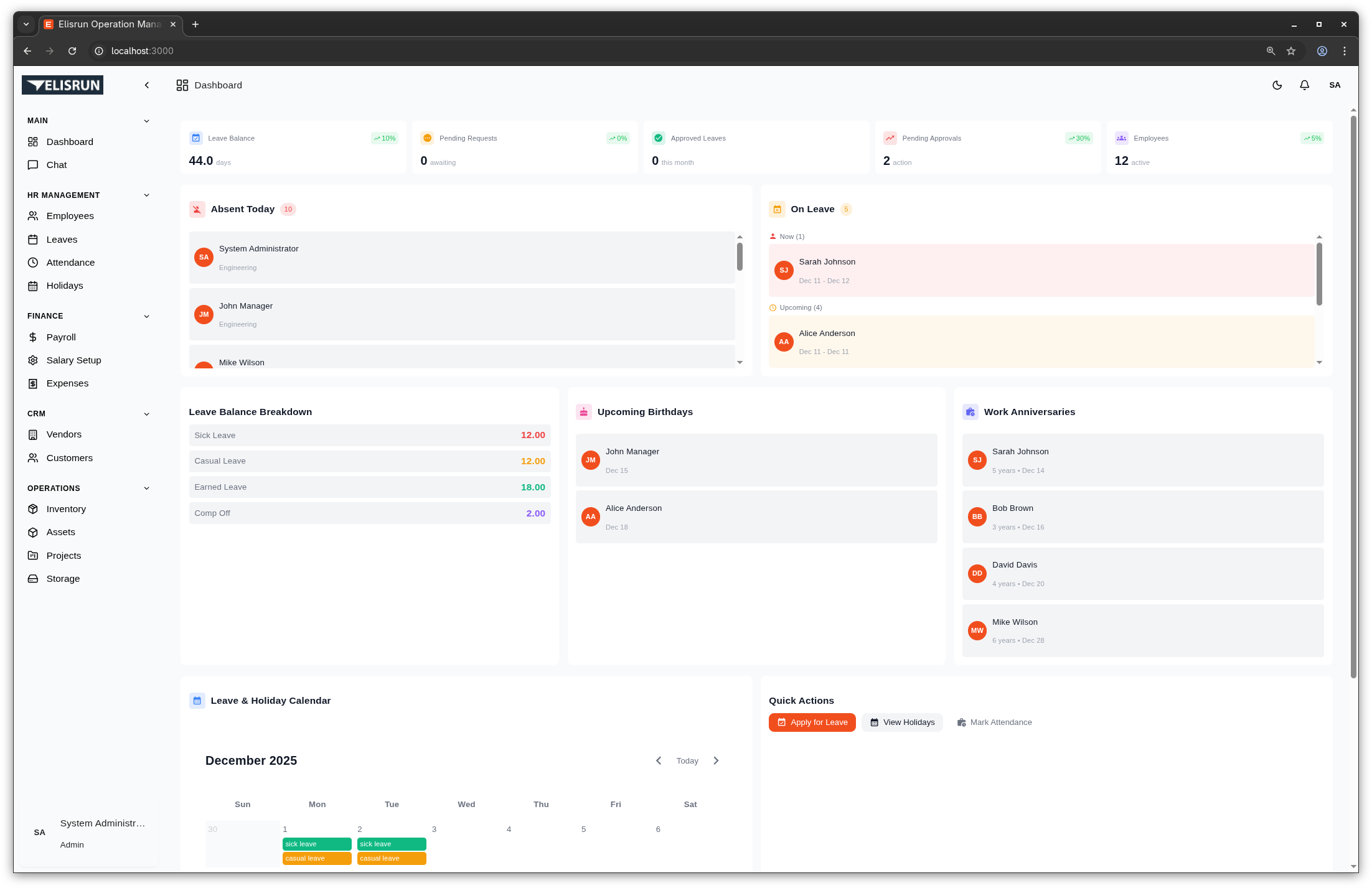

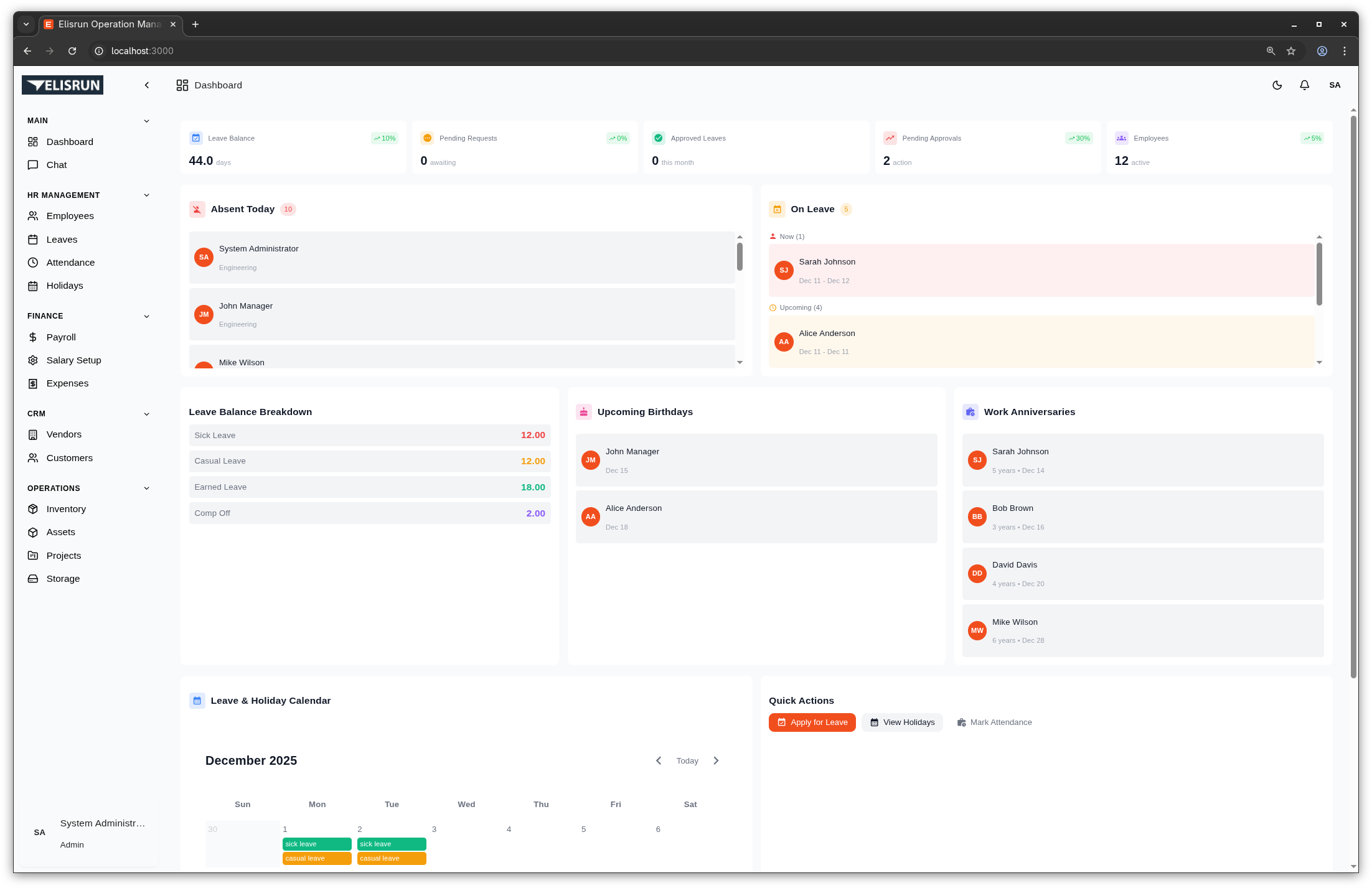

Elisrun: 60% Defect Reduction with Computer Vision

How we deployed AI-powered quality inspection in 12 weeks.

AI in Manufacturing: 2025 Outlook

Key trends shaping Industry 4.0 and smart factory adoption.

Why We Stay Small

The boutique advantage: Senior architects on every project.

Transforming Operations with Technology

We partner with manufacturing and enterprise teams to design and deploy systems that work at scale.

AI-Powered Quality Control

Reduce defects by 60% with computer vision inspection, and use predictive maintenance to catch equipment problems before they cause downtime.

- Defect Detection

- Predictive Maintenance

- Production Optimization

Custom AI That Actually Ships

Production-ready ML models in 8-12 weeks. No research projects—real systems that solve real problems.

- Custom ML Models

- LLM Integration

- Process Automation

When Off-the-Shelf Fails You

Purpose-built platforms for operations that are too complex for generic software. Your workflow, not theirs.

- Operations Management

- Workflow Automation

- Data Platforms

Elisrun: AI-Powered Quality Inspection

We deployed a computer-vision-based quality inspection system that reduced defects by 60% and paid back its full cost within 14 months.

The Boutique Advantage

Large consultancies staff projects with junior developers learning on your timeline. We take a different approach.

Senior Architects Only

Every engagement is led by engineers with production deployment experience.

No Handoffs

The architect you meet is the one building your system.

4-6 Clients at a Time

We stay selective so that every project gets the founding team's direct attention.


Common Questions

What's your typical project timeline?

Most projects are delivered in 8-12 weeks. We scope aggressively and deploy iteratively—you'll see working software within the first 2-3 weeks.

How do you handle timezone differences?

We're available 6:00 AM - 12:00 PM EST (4:30 PM - 10:30 PM IST). We use async communication tools and provide daily updates. Most clients never notice the timezone gap.

What's your pricing model?

We offer both fixed-price and time-and-materials (T&M) engagements. Fixed-price works best for well-defined projects; T&M suits ongoing development. Typical projects range from $10K to $100K+.

Do you provide ongoing support?

Yes. We offer maintenance packages after deployment. We don't disappear after launch—we're invested in your long-term success.

What makes you different from larger consultancies?

Senior architects only. No junior handoffs. No account managers. The person you meet is the one building your system. We take 4-6 clients at a time to ensure quality.

What technologies do you work with?

Python, TypeScript, React, Node.js, and AWS, plus TensorFlow and PyTorch for AI/ML. We choose the right tool for the job, not whatever's trendy.

Let's Discuss Your Challenge

30-minute call with a senior architect. No sales pitch—just technical perspective on your specific needs.

Available Hours: 6:00 AM - 12:00 PM EST / 4:30 PM - 10:30 PM IST

We typically respond within 24 hours.